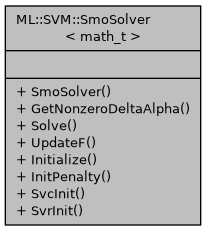

Solve the quadratic optimization problem using two level decomposition and Sequential Minimal Optimization (SMO). More...

#include <smosolver.h>

Public Member Functions | |

| SmoSolver (const raft::handle_t &handle, SvmParameter param, cuvs::distance::kernels::KernelType kernel_type, cuvs::distance::kernels::GramMatrixBase< math_t > *kernel) | |

| void | GetNonzeroDeltaAlpha (const math_t *vec, int n_ws, const int *idx, math_t *nz_vec, int *n_nz, int *nz_idx, cudaStream_t stream) |

| template<typename MatrixViewType > | |

| void | Solve (MatrixViewType matrix, int n_rows, int n_cols, math_t *y, const math_t *sample_weight, math_t **dual_coefs, int *n_support, SupportStorage< math_t > *support_matrix, int **idx, math_t *b, int max_iter=-1, int max_outer_iter=-1, int max_inner_iter=10000) |

| Solve the quadratic optimization problem. More... | |

| void | UpdateF (math_t *f, int n_rows, const math_t *delta_alpha, int n_ws, const math_t *cacheTile) |

| Update the f vector after a block solve step. More... | |

| void | Initialize (math_t **y, const math_t *sample_weight, int n_rows, int n_cols) |

| Initialize the problem to solve. More... | |

| void | InitPenalty (math_t *C_vec, const math_t *sample_weight, int n_rows) |

| void | SvcInit (const math_t *y) |

| Initialize Support Vector Classification. More... | |

| void | SvrInit (const math_t *yr, int n_rows, math_t *yc, math_t *f) |

| Initializes the solver for epsilon-SVR. More... | |

| int | GetNIter () |

Detailed Description

template<typename math_t>

class ML::SVM::SmoSolver< math_t >

Solve the quadratic optimization problem using two level decomposition and Sequential Minimal Optimization (SMO).

The general decomposition idea by Osuna is to choose q examples from all the training examples, and solve the QP problem for this subset (discussed in section 11.2 by Joachims [1]). SMO is the extreme case where we choose q=2.

Here we follow [2] and [3] and use two level decomposition. First we set q_1=1024, and solve the QP sub-problem for that (let's call it QP1). This is the outer iteration, implemented in SmoSolver::Solve.

To solve QP1, we use another decomposition, specifically the SMO (q_2 = 2), which is implemented in SmoBlockSolve.

References:

- [1] Joachims, T. Making large-scale support vector machine learning practical. In B. Scholkopf, C. Burges, & A. Smola (Eds.), Advances in kernel methods: Support vector machines. Cambridge, MA: MIT Press (1998)

- [2] J. Vanek et al. A GPU-Architecture Optimized Hierarchical Decomposition Algorithm for Support VectorMachine Training, IEEE Transactions on Parallel and Distributed Systems, vol 28, no 12, 3330, (2017)

- [3] Z. Wen et al. ThunderSVM: A Fast SVM Library on GPUs and CPUs, Journal of Machine Learning Research, 19, 1-5 (2018)

Constructor & Destructor Documentation

◆ SmoSolver()

|

inline |

Member Function Documentation

◆ GetNIter()

|

inline |

◆ GetNonzeroDeltaAlpha()

| void ML::SVM::SmoSolver< math_t >::GetNonzeroDeltaAlpha | ( | const math_t * | vec, |

| int | n_ws, | ||

| const int * | idx, | ||

| math_t * | nz_vec, | ||

| int * | n_nz, | ||

| int * | nz_idx, | ||

| cudaStream_t | stream | ||

| ) |

◆ Initialize()

| void ML::SVM::SmoSolver< math_t >::Initialize | ( | math_t ** | y, |

| const math_t * | sample_weight, | ||

| int | n_rows, | ||

| int | n_cols | ||

| ) |

Initialize the problem to solve.

Both SVC and SVR are solved as a classification problem. The optimization target (W) does not appear directly in the SMO formulation, only its derivative through f (optimality indicator vector):

\[ f_i = y_i \frac{\partial W }{\partial \alpha_i}. \]

The f_i values are initialized here, and updated at every solver iteration when alpha changes. The update step is the same for SVC and SVR, only the init step differs.

Additionally, we zero init the dual coefficients (alpha), and initialize class labels for SVR.

- Parameters

-

[in,out] y on entry class labels or target values, on exit device pointer to class labels [in] sample_weight sample weights (can be nullptr, otherwise device array of size [n_rows]) [in] n_rows [in] n_cols

◆ InitPenalty()

| void ML::SVM::SmoSolver< math_t >::InitPenalty | ( | math_t * | C_vec, |

| const math_t * | sample_weight, | ||

| int | n_rows | ||

| ) |

◆ Solve()

| void ML::SVM::SmoSolver< math_t >::Solve | ( | MatrixViewType | matrix, |

| int | n_rows, | ||

| int | n_cols, | ||

| math_t * | y, | ||

| const math_t * | sample_weight, | ||

| math_t ** | dual_coefs, | ||

| int * | n_support, | ||

| SupportStorage< math_t > * | support_matrix, | ||

| int ** | idx, | ||

| math_t * | b, | ||

| int | max_iter = -1, |

||

| int | max_outer_iter = -1, |

||

| int | max_inner_iter = 10000 |

||

| ) |

Solve the quadratic optimization problem.

The output arrays (dual_coefs, support_matrix, idx) will be allocated on the device, they should be unallocated on entry.

- Parameters

-

[in] matrix training vectors in matrix format(MLCommon::Matrix::Matrix), size [n_rows x * n_cols] [in] n_rows number of rows (training vectors) [in] n_cols number of columns (features) [in] y labels (values +/-1), size [n_rows] [in] sample_weight device array of sample weights (or nullptr if not applicable) [out] dual_coefs size [n_support] on exit [out] n_support number of support vectors [out] support_matrix support vectors in matrix format, size [n_support, n_cols] [out] idx the original training set indices of the support vectors, size [n_support] [out] b scalar constant for the decision function [in] max_iter maximum number of total iterations (default -1 for no limit) [in] max_outer_iter maximum number of outer iteration (default 100 * n_rows) [in] max_inner_iter maximum number of inner iterations (default 10000)

◆ SvcInit()

| void ML::SVM::SmoSolver< math_t >::SvcInit | ( | const math_t * | y | ) |

Initialize Support Vector Classification.

We would like to maximize the following quantity

\[ W(\mathbf{\alpha}) = -\mathbf{\alpha}^T \mathbf{1} + \frac{1}{2} \mathbf{\alpha}^T Q \mathbf{\alpha}, \]

We initialize f as:

\[ f_i = y_i \frac{\partial W(\mathbf{\alpha})}{\partial \alpha_i} = -y_i + y_j \alpha_j K(\mathbf{x}_i, \mathbf{x}_j) \]

- Parameters

-

[in] y device pointer of class labels size [n_rows]

◆ SvrInit()

| void ML::SVM::SmoSolver< math_t >::SvrInit | ( | const math_t * | yr, |

| int | n_rows, | ||

| math_t * | yc, | ||

| math_t * | f | ||

| ) |

Initializes the solver for epsilon-SVR.

For regression we are optimizing the following quantity

\[ W(\alpha^+, \alpha^-) = \epsilon \sum_{i=1}^l (\alpha_i^+ + \alpha_i^-) - \sum_{i=1}^l yc_i (\alpha_i^+ - \alpha_i^-) + \frac{1}{2} \sum_{i,j=1}^l (\alpha_i^+ - \alpha_i^-)(\alpha_j^+ - \alpha_j^-) K(\bm{x}_i, \bm{x}_j) \]

Then \( f_i = y_i \frac{\partial W(\alpha}{\partial \alpha_i} \) \( = yc_i*epsilon - yr_i \)

Additionally we set class labels for the training vectors.

References: [1] B. Schölkopf et. al (1998): New support vector algorithms, NeuroCOLT2 Technical Report Series, NC2-TR-1998-031, Section 6 [2] A.J. Smola, B. Schölkopf (2004): A tutorial on support vector regression, Statistics and Computing 14, 199–222 [3] Orchel M. (2011) Support Vector Regression as a Classification Problem with a Priori Knowledge in the Form of Detractors, Man-Machine Interactions 2. Advances in Intelligent and Soft Computing, vol 103

- Parameters

-

[in] yr device pointer with values for regression, size [n_rows] [in] n_rows [out] yc device pointer to classes associated to the dual coefficients, size [n_rows*2] [out] f device pointer f size [n_rows*2]

◆ UpdateF()

| void ML::SVM::SmoSolver< math_t >::UpdateF | ( | math_t * | f, |

| int | n_rows, | ||

| const math_t * | delta_alpha, | ||

| int | n_ws, | ||

| const math_t * | cacheTile | ||

| ) |

Update the f vector after a block solve step.

\[ f_i = f_i + \sum_{k\in WS} K_{i,k} * \Delta \alpha_k, \]

where i = [0..n_train-1], WS is the set of workspace indices, and \(K_{i,k}\) is the kernel function evaluated for training vector x_i and workspace vector x_k.

- Parameters

-

f size [n_train] n_rows delta_alpha size [n_ws] n_ws cacheTile kernel function evaluated for the following set K[X,x_ws], size [n_rows, n_ws]

The documentation for this class was generated from the following file:

- src/svm/smosolver.h