#include <decision_forest.hpp>

| Public Types | |
|---|---|
| using | forest_type = forest< layout, threshold_t, index_t, metadata_storage_t, offset_t > |
| using | node_type = typename forest_type::node_type |
| using | io_type = typename forest_type::io_type |
| using | threshold_type = threshold_t |
| using | postprocessor_type = postprocessor< io_type > |
| using | categorical_storage_type = typename node_type::index_type |

| Public Member Functions | |
|---|---|
| | decision_forest () |
| | decision_forest (raft_proto::buffer< node_type > &&nodes, raft_proto::buffer< index_type > &&root_node_indexes, raft_proto::buffer< index_type > &&node_id_mapping, index_type num_features, index_type num_outputs=index_type{2}, bool has_categorical_nodes=false, std::optional< raft_proto::buffer< io_type >> &&vector_output=std::nullopt, std::optional< raft_proto::buffer< typename node_type::index_type >> &&categorical_storage=std::nullopt, index_type leaf_size=index_type{1}, row_op row_postproc=row_op::disable, element_op elem_postproc=element_op::disable, io_type average_factor=io_type{1}, io_type bias=io_type{0}, io_type postproc_constant=io_type{1}) |
| auto | num_features () const |
| auto | num_trees () const |
| auto | has_vector_leaves () const |
| auto | num_outputs (infer_kind inference_kind=infer_kind::default_kind) const |
| auto | row_postprocessing () const |
| void | set_row_postprocessing (row_op val) |
| auto | elem_postprocessing () const |
| auto | memory_type () |
| auto | device_index () |
| void | predict (raft_proto::buffer< typename forest_type::io_type > &output, raft_proto::buffer< typename forest_type::io_type > const &input, raft_proto::cuda_stream stream=raft_proto::cuda_stream{}, infer_kind predict_type=infer_kind::default_kind, std::optional< index_type > specified_rows_per_block_iter=std::nullopt) |

| Static Public Attributes | |
|---|---|
| constexpr static auto const | layout = layout_v |

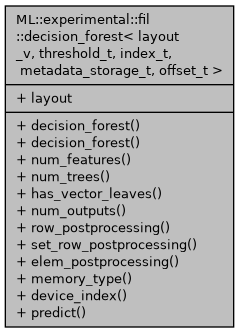

Detailed Description

template<tree_layout layout_v, typename threshold_t, typename index_t, typename metadata_storage_t, typename offset_t>

struct ML::experimental::fil::decision_forest< layout_v, threshold_t, index_t, metadata_storage_t, offset_t >

A general-purpose decision forest implementation

This template provides an optimized but generic implementation of a decision forest. Template parameters are used to specialize the implementation based on the size and characteristics of the forest. For instance, the smallest integer type that can express the offset between a parent and child node within a tree is used in order to minimize the size of a node, which increases the number of nodes that can fit within the L2 or L1 cache.
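To make the effect of the offset type on node size concrete, the following is a minimal sketch rather than FIL's actual node definition. `sketch_node`, `offset_fits`, and `nodes_per_cache` are hypothetical names introduced only for illustration, and the real node type may pack its fields differently.

```cpp
#include <cstddef>
#include <cstdint>
#include <limits>

// Hypothetical node layout for illustration only; the real FIL node type
// is defined elsewhere and may pack its fields differently.
template <typename threshold_t, typename metadata_storage_t, typename offset_t>
struct sketch_node {
  threshold_t threshold;        // split value for quantitative splits
  metadata_storage_t metadata;  // flags plus feature index
  offset_t distant_offset;      // offset to the most distant child
};

// Two candidate specializations: narrow and wide integer fields.
using small_node = sketch_node<float, std::uint16_t, std::uint16_t>;
using large_node = sketch_node<float, std::uint32_t, std::uint32_t>;

// True if the largest parent-to-child offset in the forest fits in offset_t.
template <typename offset_t>
constexpr bool offset_fits(std::uint64_t max_offset) {
  return max_offset <= static_cast<std::uint64_t>(std::numeric_limits<offset_t>::max());
}

// Number of whole nodes of type node_t that fit in a cache of cache_bytes.
template <typename node_t>
constexpr std::size_t nodes_per_cache(std::size_t cache_bytes) {
  return cache_bytes / sizeof(node_t);
}
```

On typical platforms the narrow variant is smaller than the wide one, so more nodes fit in the same cache, provided `offset_fits` holds for the forest's largest offset.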

- Template Parameters
  - layout_v: The in-memory layout of nodes in this forest
  - threshold_t: The floating-point type used for quantitative splits
  - index_t: The integer type used for storing many things within a forest, including the category value of categorical nodes and the index at which vector output for a leaf node is stored.
  - metadata_storage_t: The type used for storing node metadata. The first several bits will be used to store flags indicating various characteristics of the node, and the remaining bits provide the integer index of the feature for this node's split
  - offset_t: An integer used to indicate the offset between a node and its most distant child. This type must be large enough to store the largest such offset in the entire forest.
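The split of `metadata_storage_t` into flag bits and a feature index can be sketched as below. This is an illustration under stated assumptions, not the library's layout: the number of flag bits (3), their position in the high bits, and the names `sketch_metadata`, `pack`, `flags`, and `feature` are all hypothetical.

```cpp
#include <climits>
#include <cstdint>

// Hypothetical packing scheme: the top flag_bits bits hold flags and the
// remaining low bits hold the feature index. The actual bit assignment
// used by FIL is an implementation detail and may differ.
template <typename metadata_storage_t>
struct sketch_metadata {
  static constexpr int total_bits   = static_cast<int>(sizeof(metadata_storage_t) * CHAR_BIT);
  static constexpr int flag_bits    = 3;  // assumption for this sketch
  static constexpr int feature_bits = total_bits - flag_bits;

  static constexpr std::uint64_t flag_mask    = (std::uint64_t{1} << flag_bits) - 1;
  static constexpr std::uint64_t feature_mask = (std::uint64_t{1} << feature_bits) - 1;

  // Largest feature index representable alongside the flags.
  static constexpr std::uint64_t max_feature_index = feature_mask;

  // Combine flags and feature index into one metadata word.
  static constexpr metadata_storage_t pack(std::uint64_t flags, std::uint64_t feature) {
    return static_cast<metadata_storage_t>(((flags & flag_mask) << feature_bits) |
                                           (feature & feature_mask));
  }

  // Extract the flag bits from a metadata word.
  static constexpr std::uint64_t flags(metadata_storage_t m) {
    return (static_cast<std::uint64_t>(m) >> feature_bits) & flag_mask;
  }

  // Extract the feature index from a metadata word.
  static constexpr std::uint64_t feature(metadata_storage_t m) {
    return static_cast<std::uint64_t>(m) & feature_mask;
  }
};
```

Under these assumptions a 16-bit metadata type leaves 13 bits for the feature index, which is why a model with many features would need a wider `metadata_storage_t`.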

Member Typedef Documentation

◆ categorical_storage_type

| using ML::experimental::fil::decision_forest< layout_v, threshold_t, index_t, metadata_storage_t, offset_t >::categorical_storage_type = typename node_type::index_type |

The type used for storing data on categorical nodes

◆ forest_type

| using ML::experimental::fil::decision_forest< layout_v, threshold_t, index_t, metadata_storage_t, offset_t >::forest_type = forest<layout, threshold_t, index_t, metadata_storage_t, offset_t> |

The type of the forest object which is actually passed to the CPU/GPU for inference

◆ io_type

| using ML::experimental::fil::decision_forest< layout_v, threshold_t, index_t, metadata_storage_t, offset_t >::io_type = typename forest_type::io_type |

The type used for input and output to the model

◆ node_type

| using ML::experimental::fil::decision_forest< layout_v, threshold_t, index_t, metadata_storage_t, offset_t >::node_type = typename forest_type::node_type |

The type of nodes within the forest

◆ postprocessor_type

| using ML::experimental::fil::decision_forest< layout_v, threshold_t, index_t, metadata_storage_t, offset_t >::postprocessor_type = postprocessor<io_type> |

The type used to indicate how leaf output should be post-processed

◆ threshold_type

| using ML::experimental::fil::decision_forest< layout_v, threshold_t, index_t, metadata_storage_t, offset_t >::threshold_type = threshold_t |

The type used for quantitative splits within the model

Constructor & Destructor Documentation

◆ decision_forest() [1/2]

| decision_forest () | inline |

Construct an empty decision forest

◆ decision_forest() [2/2]

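| decision_forest (raft_proto::buffer< node_type > &&nodes, raft_proto::buffer< index_type > &&root_node_indexes, raft_proto::buffer< index_type > &&node_id_mapping, index_type num_features, index_type num_outputs=index_type{2}, bool has_categorical_nodes=false, std::optional< raft_proto::buffer< io_type >> &&vector_output=std::nullopt, std::optional< raft_proto::buffer< typename node_type::index_type >> &&categorical_storage=std::nullopt, index_type leaf_size=index_type{1}, row_op row_postproc=row_op::disable, element_op elem_postproc=element_op::disable, io_type average_factor=io_type{1}, io_type bias=io_type{0}, io_type postproc_constant=io_type{1}) | inline |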

Construct a decision forest with the indicated data

- Parameters
  - nodes: A buffer containing all nodes within the forest
  - root_node_indexes: A buffer containing the index of the root node of every tree in the forest
  - node_id_mapping: Mapping used to convert FIL's internal node ID into Treelite's node ID. Only relevant when predict_type == infer_kind::leaf_id
  - num_features: The number of features per input sample for this model
  - num_outputs: The number of outputs per row from this model
  - has_categorical_nodes: Whether this forest contains any categorical nodes
  - vector_output: A buffer containing the output from all vector leaves for this model. Each leaf node will specify the offset within this buffer at which its vector output begins, and leaf_size will be used to determine how many subsequent entries from the buffer should be used to construct the vector output. A value of std::nullopt indicates that this is not a vector leaf model.
  - categorical_storage: For models whose categorical features have too many categories to be stored in the bits of an index_t, it may be necessary to store categorical information external to the node itself. This buffer contains the necessary storage for this information.
  - leaf_size: The number of output values per leaf (1 for non-vector leaves; >1 for vector leaves)
  - row_postproc: The post-processing operation to be applied to an entire row of the model output
  - elem_postproc: The per-element post-processing operation to be applied to the model output
  - average_factor: A factor which is used for output normalization
  - bias: The bias term that is applied to the output after normalization
  - postproc_constant: A constant used by some post-processing operations, including sigmoid, exponential, and logarithm_one_plus_exp
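The interaction between vector_output, leaf_size, average_factor, and bias can be sketched with plain containers. This is an illustration, not the library's inference code: `accumulate_leaf` and `normalize` are hypothetical helpers, the leaf offset is assumed to be an element index into the flat buffer, and normalization is assumed to mean dividing by average_factor before adding bias.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Add one leaf's vector output into a per-row accumulator.
// vector_output is the flat buffer holding all vector leaves; leaf_offset is
// assumed to be the element index at which this leaf's values begin.
template <typename io_t>
void accumulate_leaf(std::vector<io_t>& row_accum,
                     std::vector<io_t> const& vector_output,
                     std::size_t leaf_offset,
                     std::size_t leaf_size) {
  assert(row_accum.size() == leaf_size);
  assert(leaf_offset + leaf_size <= vector_output.size());
  for (std::size_t i = 0; i < leaf_size; ++i) {
    row_accum[i] += vector_output[leaf_offset + i];
  }
}

// Assumed normalization: divide the summed output by average_factor,
// then add bias. The exact formula used by the library is not stated here.
template <typename io_t>
void normalize(std::vector<io_t>& row_accum, io_t average_factor, io_t bias) {
  for (auto& value : row_accum) {
    value = value / average_factor + bias;
  }
}
```

For a two-tree model with leaf_size 2, each tree contributes the two entries starting at its leaf's offset, and the summed pair is then normalized once per row.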

Member Function Documentation

◆ device_index()

| auto device_index () | inline |

The ID of the device on which this model is loaded

◆ elem_postprocessing()

| auto elem_postprocessing () const | inline |

The operation used for postprocessing each element of the output for a single row

◆ has_vector_leaves()

| auto has_vector_leaves () const | inline |

Whether or not leaf nodes have vector outputs

◆ memory_type()

| auto memory_type () | inline |

The type of memory (device/host) where the model is stored

◆ num_features()

| auto num_features () const | inline |

The number of features per row expected by the model

◆ num_outputs()

| auto num_outputs (infer_kind inference_kind=infer_kind::default_kind) const | inline |

The number of outputs per row generated by the model for the given type of inference

◆ num_trees()

| auto num_trees () const | inline |

The number of trees in the model

◆ predict()

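| void predict (raft_proto::buffer< typename forest_type::io_type > &output, raft_proto::buffer< typename forest_type::io_type > const &input, raft_proto::cuda_stream stream=raft_proto::cuda_stream{}, infer_kind predict_type=infer_kind::default_kind, std::optional< index_type > specified_rows_per_block_iter=std::nullopt) | inline |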

Perform inference with this model

- Parameters
  - [out] output: The buffer where the model output should be stored. This must be of size ROWS x num_outputs().
  - [in] input: The buffer containing the input data
  - [in] stream: For GPU execution, the CUDA stream. For CPU execution, this optional parameter can be safely omitted.
  - [in] predict_type: Type of inference to perform. Defaults to summing the outputs of all trees and producing one output per row. If set to "per_tree", the outputs of the individual trees are returned instead. If set to "leaf_id", the integer ID of the leaf node reached in each tree is returned.
  - [in] specified_rows_per_block_iter: If non-nullopt, this value is used to determine how many rows are evaluated for each inference iteration within a CUDA block. Runtime performance is quite sensitive to this value, but it is difficult to predict a priori, so it is recommended to perform a search over possible values with realistic batch sizes in order to determine the optimal value. Any power of 2 from 1 to 32 is a valid value, and in general larger batches benefit from larger values.
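The sizing and tuning constraints above can be expressed as small helpers. These are hypothetical utilities written for this page, not part of the library: `required_output_size`, `is_valid_rows_per_block_iter`, and `candidate_rows_per_block_iter` are illustrative names, and the actual timing loop around predict is left to the caller.

```cpp
#include <cstddef>
#include <vector>

// Number of elements the output buffer must hold: ROWS x num_outputs().
constexpr std::size_t required_output_size(std::size_t rows, std::size_t num_outputs) {
  return rows * num_outputs;
}

// A value is valid if it is a power of 2 in the range [1, 32].
constexpr bool is_valid_rows_per_block_iter(std::size_t value) {
  return value >= 1 && value <= 32 && (value & (value - 1)) == 0;
}

// All valid values, in increasing order, to iterate over in a tuning search.
inline std::vector<std::size_t> candidate_rows_per_block_iter() {
  std::vector<std::size_t> candidates;
  for (std::size_t value = 1; value <= 32; value *= 2) {
    candidates.push_back(value);
  }
  return candidates;
}
```

A tuning search would time predict once per candidate with a realistic batch size, passing the candidate as specified_rows_per_block_iter, and keep the fastest value.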

◆ row_postprocessing()

| auto row_postprocessing () const | inline |

The operation used for postprocessing all outputs for a single row

◆ set_row_postprocessing()

| void set_row_postprocessing (row_op val) | inline |

Member Data Documentation

◆ layout

| constexpr static auto const layout = layout_v | static constexpr |

The in-memory layout of nodes in this forest

The documentation for this struct was generated from the following file:

- include/cuml/experimental/fil/decision_forest.hpp