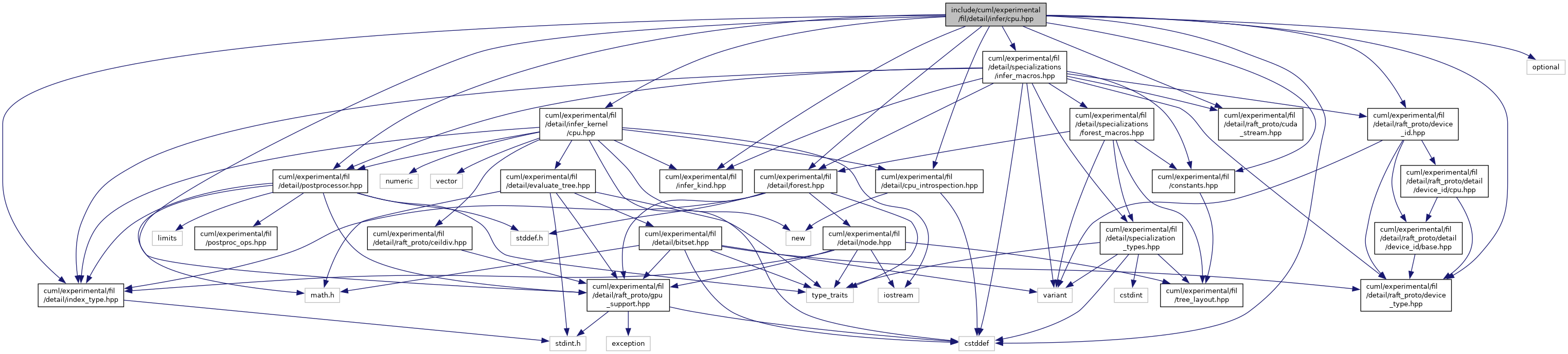

#include <cuml/fil/constants.hpp>
#include <cuml/fil/detail/cpu_introspection.hpp>
#include <cuml/fil/detail/forest.hpp>
#include <cuml/fil/detail/index_type.hpp>
#include <cuml/fil/detail/infer_kernel/cpu.hpp>
#include <cuml/fil/detail/postprocessor.hpp>
#include <cuml/fil/detail/raft_proto/cuda_stream.hpp>
#include <cuml/fil/detail/raft_proto/device_id.hpp>
#include <cuml/fil/detail/raft_proto/device_type.hpp>
#include <cuml/fil/detail/raft_proto/gpu_support.hpp>
#include <cuml/fil/detail/specializations/infer_macros.hpp>
#include <cuml/fil/infer_kind.hpp>
#include <cstddef>
#include <optional>

Include dependency graph for cpu.hpp:

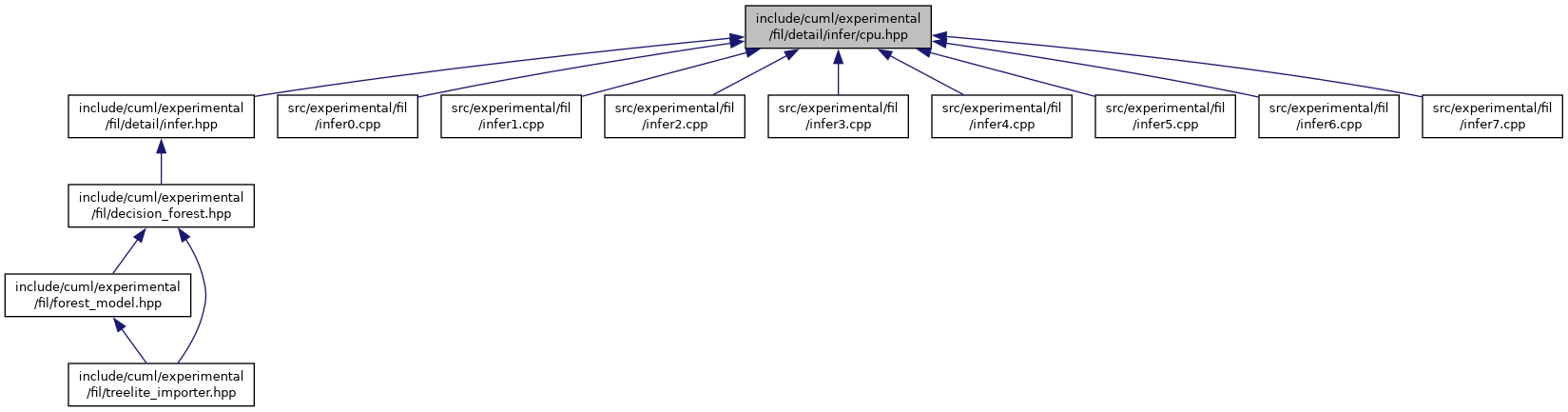

This graph shows which files directly or indirectly include this file:


Namespaces

| Namespace |
|---|
| `ML` |
| `ML::fil` |
| `ML::fil::detail` |
| `ML::fil::detail::inference` |

Functions

| Return type | Function |
|---|---|
| `template<raft_proto::device_type D, bool has_categorical_nodes, typename forest_t, typename vector_output_t = std::nullptr_t, typename categorical_data_t = std::nullptr_t>` | |
| `std::enable_if_t<std::disjunction_v<std::bool_constant<D == raft_proto::device_type::cpu>, std::bool_constant<!raft_proto::GPU_ENABLED>>, void>` | `ML::fil::detail::inference::infer(forest_t const& forest, postprocessor<typename forest_t::io_type> const& postproc, typename forest_t::io_type* output, typename forest_t::io_type* input, index_type row_count, index_type col_count, index_type output_count, vector_output_t vector_output = nullptr, categorical_data_t categorical_data = nullptr, infer_kind infer_type = infer_kind::default_kind, std::optional<index_type> specified_chunk_size = std::nullopt, raft_proto::device_id<D> device = raft_proto::device_id<D>{}, raft_proto::cuda_stream = raft_proto::cuda_stream{})` |
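The return type of `infer` uses `std::enable_if_t` with `std::disjunction_v` so that this overload participates in overload resolution only when `D` is the CPU device type or the build has no GPU support. The sketch below illustrates that SFINAE pattern in isolation. All names here (`device_type`, `GPU_ENABLED`, `select_overload`) are hypothetical stand-ins rather than the cuML API, and the GPU-side condition is an assumption about how a complementary overload could be written; that overload is not documented on this page. The sketch only reports which overload was selected. It does not show what the real CPU overload does when asked for a GPU device in a CPU-only build.

```cpp
#include <type_traits>

// Hypothetical stand-ins for raft_proto::device_type and raft_proto::GPU_ENABLED.
enum class device_type { cpu, gpu };
inline constexpr bool GPU_ENABLED = false;  // as if built without GPU support

// CPU-side overload: same enabling condition as infer above,
// (D == cpu) || !gpu_enabled. gpu_enabled is a template parameter here
// only so that both build configurations can be checked in one file.
template <device_type D, bool gpu_enabled = GPU_ENABLED>
constexpr std::enable_if_t<std::disjunction_v<std::bool_constant<D == device_type::cpu>,
                                              std::bool_constant<!gpu_enabled>>,
                           device_type>
select_overload()
{
  return device_type::cpu;
}

// Assumed GPU-side overload: the logical complement, (D == gpu) && gpu_enabled,
// so exactly one of the two templates is viable for every (D, gpu_enabled) pair.
template <device_type D, bool gpu_enabled = GPU_ENABLED>
constexpr std::enable_if_t<std::conjunction_v<std::bool_constant<D == device_type::gpu>,
                                              std::bool_constant<gpu_enabled>>,
                           device_type>
select_overload()
{
  return device_type::gpu;
}

// Usage: in a CPU-only configuration, even a GPU request resolves to the CPU-side overload.
static_assert(select_overload<device_type::gpu, false>() == device_type::cpu);
static_assert(select_overload<device_type::gpu, true>() == device_type::gpu);
```

Because the two conditions are complementary, a call never becomes ambiguous and never lacks a viable overload.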