#include <forest_model.hpp>

| Return type | Member |
| --- | --- |
| | forest_model (decision_forest_variant &&forest=decision_forest_variant{}) |
| auto | num_features () |
| auto | num_outputs () |
| auto | num_trees () |
| auto | has_vector_leaves () |
| auto | row_postprocessing () |
| void | set_row_postprocessing (row_op val) |
| auto | elem_postprocessing () |
| auto | memory_type () |
| auto | device_index () |
| auto | is_double_precision () |
| template<typename io_t > void | predict (raft_proto::buffer< io_t > &output, raft_proto::buffer< io_t > const &input, raft_proto::cuda_stream stream=raft_proto::cuda_stream{}, infer_kind predict_type=infer_kind::default_kind, std::optional< index_type > specified_chunk_size=std::nullopt) |
| template<typename io_t > void | predict (raft_proto::handle_t const &handle, raft_proto::buffer< io_t > &output, raft_proto::buffer< io_t > const &input, infer_kind predict_type=infer_kind::default_kind, std::optional< index_type > specified_chunk_size=std::nullopt) |
| template<typename io_t > void | predict (raft_proto::handle_t const &handle, io_t *output, io_t *input, std::size_t num_rows, raft_proto::device_type out_mem_type, raft_proto::device_type in_mem_type, infer_kind predict_type=infer_kind::default_kind, std::optional< index_type > specified_chunk_size=std::nullopt) |

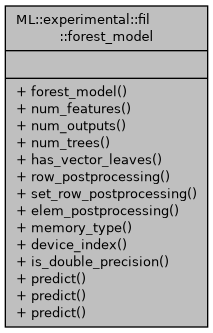

A model used for performing inference with FIL

This struct is a wrapper for all variants of decision_forest supported by a standard FIL build.
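The wrapper idea can be illustrated with a small, self-contained sketch. It assumes that `decision_forest_variant` behaves like a `std::variant` over concrete forest types; every name below (`float_forest`, `double_forest`, `toy_forest_variant`, `toy_forest_model`) is hypothetical and is not part of FIL. The sketch only shows how accessors such as num_features() could be forwarded to whichever alternative is held.

```cpp
#include <cstddef>
#include <utility>
#include <variant>

// Hypothetical stand-ins for two concrete forest types; not FIL's real classes.
struct float_forest {
  std::size_t features = 0;
  std::size_t num_features() const { return features; }
  bool is_double_precision() const { return false; }
};

struct double_forest {
  std::size_t features = 0;
  std::size_t num_features() const { return features; }
  bool is_double_precision() const { return true; }
};

// Assumed shape of the variant type: one alternative per supported forest.
using toy_forest_variant = std::variant<float_forest, double_forest>;

// A wrapper in the spirit of forest_model: it owns the variant and forwards
// each accessor to whichever alternative is currently held.
struct toy_forest_model {
  explicit toy_forest_model(toy_forest_variant&& forest = toy_forest_variant{})
    : forest_{std::move(forest)} {}

  auto num_features() const {
    return std::visit([](auto const& f) { return f.num_features(); }, forest_);
  }

  auto is_double_precision() const {
    return std::visit([](auto const& f) { return f.is_double_precision(); }, forest_);
  }

 private:
  toy_forest_variant forest_;
};
```

With this shape, callers query the wrapper without knowing which concrete forest type it holds, which is consistent with the `auto` return types listed for the accessors.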

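◆ forest_model()

| ML::experimental::fil::forest_model::forest_model (decision_forest_variant &&forest=decision_forest_variant{}) |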

◆ device_index()

| auto ML::experimental::fil::forest_model::device_index () | inline |

The ID of the device on which this model is loaded

◆ elem_postprocessing()

| auto ML::experimental::fil::forest_model::elem_postprocessing () | inline |

The operation used for postprocessing each element of the output for a single row

◆ has_vector_leaves()

| auto ML::experimental::fil::forest_model::has_vector_leaves () | inline |

Whether or not leaf nodes use vector outputs

◆ is_double_precision()

| auto ML::experimental::fil::forest_model::is_double_precision () | inline |

Whether or not the model is loaded at double precision

◆ memory_type()

| auto ML::experimental::fil::forest_model::memory_type () | inline |

The type of memory (device/host) where the model is stored

◆ num_features()

| auto ML::experimental::fil::forest_model::num_features () | inline |

The number of features per row expected by the model

◆ num_outputs()

| auto ML::experimental::fil::forest_model::num_outputs () | inline |

The number of outputs per row generated by the model

◆ num_trees()

| auto ML::experimental::fil::forest_model::num_trees () | inline |

The number of trees in the model

◆ predict() [1/3]

Perform inference on given input

Parameters

| Direction | Name | Description |
| --- | --- | --- |
| [out] | output | The buffer where model output should be stored. This must be of size at least ROWS x num_outputs(). |
| [in] | input | The buffer containing input data. |
| [in] | stream | A raft_proto::cuda_stream, which is a transparent wrapper for cudaStream_t on GPU-enabled builds or a CUDA-free placeholder object on CPU-only builds. |
| [in] | predict_type | Type of inference to perform. By default, the outputs of all trees are summed to produce an output per row. If set to "per_tree", the outputs of the individual trees are returned instead. If set to "leaf_id", the integer ID of the leaf node for each tree is returned. |
| [in] | specified_chunk_size | Specifies the mini-batch size for processing. This has different meanings on CPU and GPU. On GPU, it corresponds to the number of rows evaluated per inference iteration on a single block. It can take on any power of 2 from 1 to 32, and runtime performance is quite sensitive to the value chosen. In general, larger batches benefit from higher values, but it is hard to predict the optimal value a priori. If omitted, a heuristic will be used to select a reasonable value. On CPU, this argument can generally just be omitted. |
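The size requirement on `output` (at least ROWS x num_outputs()) can be checked before calling predict. The following is a minimal host-side sketch, not FIL code: `required_output_size` and `make_host_output` are hypothetical helpers, and the sketch assumes the input is a dense row-major array with num_features() values per row.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Minimum number of elements the output must hold: ROWS x num_outputs().
inline std::size_t required_output_size(std::size_t num_rows, std::size_t num_outputs) {
  return num_rows * num_outputs;
}

// Hypothetical helper: derive the row count from a dense, row-major input and
// allocate a zero-initialized host output of exactly the minimum size.
template <typename io_t>
std::vector<io_t> make_host_output(std::size_t input_elems,
                                   std::size_t num_features,
                                   std::size_t num_outputs) {
  if (num_features == 0 || input_elems % num_features != 0) {
    throw std::invalid_argument("input size is not a multiple of num_features");
  }
  std::size_t const num_rows = input_elems / num_features;
  return std::vector<io_t>(required_output_size(num_rows, num_outputs));
}
```

In real use, the two counts would come from num_features() and num_outputs() on the loaded model, and the resulting storage would then be wrapped in whatever buffer type the chosen predict overload expects.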

◆ predict() [2/3]

Perform inference on given input

Parameters

| Direction | Name | Description |
| --- | --- | --- |
| [in] | handle | The raft_proto::handle_t (wrapper for raft::handle_t on GPU) which will be used to provide streams for evaluation. |
| [out] | output | Pointer to the memory location where output should be written. |
| [in] | input | Pointer to the input data. |
| [in] | num_rows | Number of rows in input. |
| [in] | out_mem_type | The memory type (device/host) of the output buffer. |
| [in] | in_mem_type | The memory type (device/host) of the input buffer. |
| [in] | predict_type | Type of inference to perform. By default, the outputs of all trees are summed to produce an output per row. If set to "per_tree", the outputs of the individual trees are returned instead. If set to "leaf_id", the integer ID of the leaf node for each tree is returned. |
| [in] | specified_chunk_size | Specifies the mini-batch size for processing. This has different meanings on CPU and GPU. On GPU, it corresponds to the number of rows evaluated per inference iteration on a single block. It can take on any power of 2 from 1 to 32, and runtime performance is quite sensitive to the value chosen. In general, larger batches benefit from higher values, but it is hard to predict the optimal value a priori. If omitted, a heuristic will be used to select a reasonable value. On CPU, this argument can generally just be omitted. |
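The three `predict_type` modes can be pictured with a toy forest of one-split trees. This is an illustrative sketch only, not FIL's implementation: `toy_infer_kind`, `toy_leaf`, `toy_stump`, and `toy_predict_row` are hypothetical names, and storing leaf IDs as `float` in the output is an assumption made to keep a single output type.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical mirror of the three modes described above.
enum class toy_infer_kind { default_kind, per_tree, leaf_id };

struct toy_leaf {
  int id;       // integer ID of the leaf within its tree
  float value;  // scalar output stored at the leaf
};

// A one-split tree: go left when row[feature] < threshold, otherwise right.
struct toy_stump {
  std::size_t feature;
  float threshold;
  toy_leaf left;
  toy_leaf right;

  toy_leaf const& evaluate(std::vector<float> const& row) const {
    return row[feature] < threshold ? left : right;
  }
};

// Produce the output for a single row under the requested mode.
inline std::vector<float> toy_predict_row(std::vector<toy_stump> const& trees,
                                          std::vector<float> const& row,
                                          toy_infer_kind kind) {
  std::vector<float> out;
  if (kind == toy_infer_kind::default_kind) {
    // Default: sum the outputs of all trees into one value for the row.
    float sum = 0.0f;
    for (auto const& tree : trees) { sum += tree.evaluate(row).value; }
    out.push_back(sum);
    return out;
  }
  // per_tree: one value per tree; leaf_id: one leaf ID per tree.
  for (auto const& tree : trees) {
    auto const& leaf = tree.evaluate(row);
    out.push_back(kind == toy_infer_kind::per_tree ? leaf.value
                                                   : static_cast<float>(leaf.id));
  }
  return out;
}
```

In this toy, the default mode yields one value per row, while the other two modes yield one value per tree. The output storage passed to predict has to be sized for the mode that is requested.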

◆ predict() [3/3]

Perform inference on given input

Parameters

| Direction | Name | Description |
| --- | --- | --- |
| [in] | handle | The raft_proto::handle_t (wrapper for raft::handle_t on GPU) which will be used to provide streams for evaluation. |
| [out] | output | The buffer where model output should be stored. If this buffer is on host while the model is on device or vice versa, work will be distributed across available streams to copy the data back to this output location. This must be of size at least ROWS x num_outputs(). |
| [in] | input | The buffer containing input data. If this buffer is on host while the model is on device or vice versa, work will be distributed across available streams to copy the input data to the appropriate location and perform inference. |
| [in] | predict_type | Type of inference to perform. By default, the outputs of all trees are summed to produce an output per row. If set to "per_tree", the outputs of the individual trees are returned instead. If set to "leaf_id", the integer ID of the leaf node for each tree is returned. |
| [in] | specified_chunk_size | Specifies the mini-batch size for processing. This has different meanings on CPU and GPU. On GPU, it corresponds to the number of rows evaluated per inference iteration on a single block. It can take on any power of 2 from 1 to 32, and runtime performance is quite sensitive to the value chosen. In general, larger batches benefit from higher values, but it is hard to predict the optimal value a priori. If omitted, a heuristic will be used to select a reasonable value. On CPU, this argument can generally just be omitted. |
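Because `specified_chunk_size` on GPU is limited to powers of 2 from 1 to 32, a caller may want to validate or round a requested value before passing it in. The helpers below are a hypothetical sketch, not part of FIL: `toy_index_type` stands in for `index_type` (whose actual definition is not given here), and rounding down to the nearest valid value is one possible policy rather than FIL's behavior.

```cpp
#include <cstdint>
#include <optional>

// Assumed stand-in for FIL's index_type; the real alias may differ.
using toy_index_type = std::uint32_t;

// True if n is a power of 2 in the documented GPU range [1, 32].
constexpr bool is_valid_gpu_chunk_size(toy_index_type n) {
  return n >= 1 && n <= 32 && (n & (n - 1)) == 0;
}

// Round a request down to the nearest valid value. Requests below 1 map to 1
// and requests above 32 map to 32.
constexpr toy_index_type round_down_chunk_size(toy_index_type requested) {
  toy_index_type result = 1;
  while (result * 2 <= requested && result * 2 <= 32) { result *= 2; }
  return result;
}

// Keep std::nullopt untouched so the library heuristic can choose a value;
// otherwise return a value that satisfies the documented constraint.
inline std::optional<toy_index_type> sanitize_chunk_size(
  std::optional<toy_index_type> requested) {
  if (!requested.has_value()) { return std::nullopt; }
  return round_down_chunk_size(*requested);
}
```

Since runtime performance is sensitive to this value, a tuning loop would typically time predict for each of 1, 2, 4, 8, 16, and 32 on representative batch sizes and keep the fastest.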

◆ row_postprocessing()

| auto ML::experimental::fil::forest_model::row_postprocessing () | inline |

The operation used for postprocessing all outputs for a single row

◆ set_row_postprocessing()

| void ML::experimental::fil::forest_model::set_row_postprocessing (row_op val) | inline |
