Scaling up Hyperparameter Optimization with NVIDIA DGX Cloud and XGBoost GPU Algorithm

Choosing an optimal set of hyperparameters is a daunting task, especially for algorithms like XGBoost that have many hyperparameters to tune. In this notebook, we will show how to speed up hyperparameter optimization by running multiple training jobs in parallel on NVIDIA DGX Cloud.

Prerequisites

See Documentation

Follow the instructions in NVIDIA DGX Cloud (Base Command Platform) to launch a Base Command Platform (BCP) job with RAPIDS.

Note

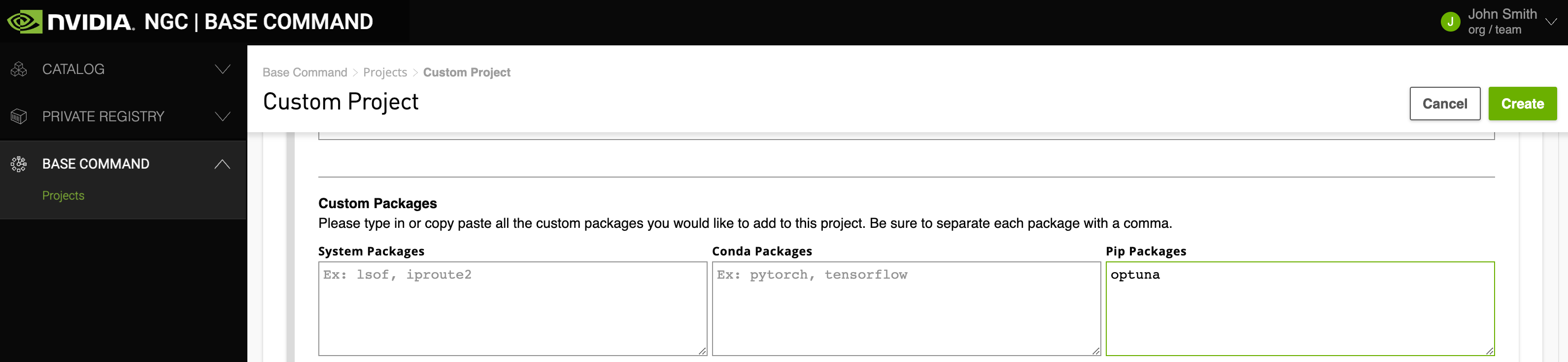

When configuring your cluster, make sure you install optuna, because it is used later in the notebook.

Once your cluster is running and you have access to Jupyter, save this notebook and run through the cells.
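Before running the remaining cells, it can help to confirm that the packages the notebook imports are available in the Jupyter environment. The following is a minimal sketch using only the standard library; the helper name `find_missing_packages` is hypothetical, and the package list is an assumption based on the imports used later in this notebook.

```python
import importlib.util


def find_missing_packages(names):
    """Return the subset of top-level package names that cannot be located for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# Top-level packages imported later in this notebook (assumed list).
required = ["dask", "distributed", "optuna", "xgboost", "sklearn"]
missing = find_missing_packages(required)

if missing:
    print(f"Missing packages: {', '.join(missing)}")
else:
    print("All required packages are importable.")
```

This only checks the environment the notebook kernel runs in. The Dask workers need the same packages, so a clean result here does not guarantee that every worker is configured correctly.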

Connect to Dask cluster

from dask.distributed import Client

client = Client("ws://localhost:8786")

client

Client

Client-e30a2ceb-d856-11ed-826a-8a2d4b69b538

| Connection method: Direct | |

| Dashboard: http://localhost:8787/status |

Scheduler Info

Scheduler

Scheduler-be131a42-88e8-41ec-a14a-1be043f3f2bd

| Comm: ws://100.96.148.133:8786 | Workers: 14 |

| Dashboard: http://100.96.148.133:8787/status | Total threads: 14 |

| Started: 4 minutes ago | Total memory: 800.00 GiB |

Workers

Worker: ws://100.96.148.133:33001

| Comm: ws://100.96.148.133:33001 | Total threads: 1 |

| Dashboard: http://100.96.148.133:38395/status | Memory: 66.67 GiB |

| Nanny: ws://100.96.148.133:34335 | |

| Local directory: /rapids/notebooks/dask-worker-space/worker-ls6hi4kc | |

| GPU: Tesla V100-SXM2-32GB-LS | GPU memory: 32.00 GiB |

| Tasks executing: 0 | Tasks in memory: 0 |

| Tasks ready: 0 | Tasks in flight: 0 |

| CPU usage: 0.0% | Last seen: Just now |

| Memory usage: 405.94 MiB | Spilled bytes: 0 B |

| Read bytes: 20.35 kiB | Write bytes: 10.48 kiB |

Worker: ws://100.96.148.133:35365

| Comm: ws://100.96.148.133:35365 | Total threads: 1 |

| Dashboard: http://100.96.148.133:33337/status | Memory: 66.67 GiB |

| Nanny: ws://100.96.148.133:44471 | |

| Local directory: /rapids/notebooks/dask-worker-space/worker-29et53zu | |

| GPU: Tesla V100-SXM2-32GB-LS | GPU memory: 32.00 GiB |

| Tasks executing: 0 | Tasks in memory: 0 |

| Tasks ready: 0 | Tasks in flight: 0 |

| CPU usage: 2.0% | Last seen: Just now |

| Memory usage: 404.79 MiB | Spilled bytes: 0 B |

| Read bytes: 16.02 kiB | Write bytes: 10.17 kiB |

Worker: ws://100.96.148.133:35445

| Comm: ws://100.96.148.133:35445 | Total threads: 1 |

| Dashboard: http://100.96.148.133:42335/status | Memory: 66.67 GiB |

| Nanny: ws://100.96.148.133:46039 | |

| Local directory: /rapids/notebooks/dask-worker-space/worker-y9qgj969 | |

| GPU: Tesla V100-SXM2-32GB-LS | GPU memory: 32.00 GiB |

| Tasks executing: 0 | Tasks in memory: 0 |

| Tasks ready: 0 | Tasks in flight: 0 |

| CPU usage: 0.0% | Last seen: Just now |

| Memory usage: 406.48 MiB | Spilled bytes: 0 B |

| Read bytes: 27.82 kiB | Write bytes: 12.72 kiB |

Worker: ws://100.96.148.133:40871

| Comm: ws://100.96.148.133:40871 | Total threads: 1 |

| Dashboard: http://100.96.148.133:38443/status | Memory: 66.67 GiB |

| Nanny: ws://100.96.148.133:43913 | |

| Local directory: /rapids/notebooks/dask-worker-space/worker-gy9988od | |

| GPU: Tesla V100-SXM2-32GB-LS | GPU memory: 32.00 GiB |

| Tasks executing: 0 | Tasks in memory: 0 |

| Tasks ready: 0 | Tasks in flight: 0 |

| CPU usage: 2.0% | Last seen: Just now |

| Memory usage: 406.17 MiB | Spilled bytes: 0 B |

| Read bytes: 17.70 kiB | Write bytes: 8.42 kiB |

Worker: ws://100.96.148.133:41459

| Comm: ws://100.96.148.133:41459 | Total threads: 1 |

| Dashboard: http://100.96.148.133:43263/status | Memory: 66.67 GiB |

| Nanny: ws://100.96.148.133:39115 | |

| Local directory: /rapids/notebooks/dask-worker-space/worker-d3ao3_xc | |

| GPU: Tesla V100-SXM2-32GB-LS | GPU memory: 32.00 GiB |

| Tasks executing: 0 | Tasks in memory: 0 |

| Tasks ready: 0 | Tasks in flight: 0 |

| CPU usage: 0.0% | Last seen: Just now |

| Memory usage: 406.29 MiB | Spilled bytes: 0 B |

| Read bytes: 26.71 kiB | Write bytes: 13.11 kiB |

Worker: ws://100.96.148.133:44129

| Comm: ws://100.96.148.133:44129 | Total threads: 1 |

| Dashboard: http://100.96.148.133:36615/status | Memory: 66.67 GiB |

| Nanny: ws://100.96.148.133:41335 | |

| Local directory: /rapids/notebooks/dask-worker-space/worker-w2xb5fce | |

| GPU: Tesla V100-SXM2-32GB-LS | GPU memory: 32.00 GiB |

| Tasks executing: 0 | Tasks in memory: 0 |

| Tasks ready: 0 | Tasks in flight: 0 |

| CPU usage: 2.0% | Last seen: Just now |

| Memory usage: 405.00 MiB | Spilled bytes: 0 B |

| Read bytes: 10.42 kiB | Write bytes: 4.83 kiB |

Worker: ws://100.96.165.66:34765

| Comm: ws://100.96.165.66:34765 | Total threads: 1 |

| Dashboard: http://100.96.165.66:46631/status | Memory: 50.00 GiB |

| Nanny: ws://100.96.165.66:36555 | |

| Local directory: /rapids/notebooks/dask-worker-space/worker-bdtrqsih | |

| GPU: Tesla V100-SXM2-32GB-LS | GPU memory: 32.00 GiB |

| Tasks executing: 0 | Tasks in memory: 0 |

| Tasks ready: 0 | Tasks in flight: 0 |

| CPU usage: 0.0% | Last seen: Just now |

| Memory usage: 403.67 MiB | Spilled bytes: 0 B |

| Read bytes: 3.77 kiB | Write bytes: 8.26 kiB |

Worker: ws://100.96.165.66:38307

| Comm: ws://100.96.165.66:38307 | Total threads: 1 |

| Dashboard: http://100.96.165.66:33575/status | Memory: 50.00 GiB |

| Nanny: ws://100.96.165.66:42987 | |

| Local directory: /rapids/notebooks/dask-worker-space/worker-lfueidsj | |

| GPU: Tesla V100-SXM2-32GB-LS | GPU memory: 32.00 GiB |

| Tasks executing: 0 | Tasks in memory: 0 |

| Tasks ready: 0 | Tasks in flight: 0 |

| CPU usage: 2.0% | Last seen: Just now |

| Memory usage: 405.98 MiB | Spilled bytes: 0 B |

| Read bytes: 642.2379507483156 B | Write bytes: 1.37 kiB |

Worker: ws://100.96.165.66:39421

| Comm: ws://100.96.165.66:39421 | Total threads: 1 |

| Dashboard: http://100.96.165.66:32905/status | Memory: 50.00 GiB |

| Nanny: ws://100.96.165.66:43779 | |

| Local directory: /rapids/notebooks/dask-worker-space/worker-gkndrj_h | |

| GPU: Tesla V100-SXM2-32GB-LS | GPU memory: 32.00 GiB |

| Tasks executing: 0 | Tasks in memory: 0 |

| Tasks ready: 0 | Tasks in flight: 0 |

| CPU usage: 2.0% | Last seen: Just now |

| Memory usage: 410.18 MiB | Spilled bytes: 0 B |

| Read bytes: 2.87 kiB | Write bytes: 5.58 kiB |

Worker: ws://100.96.165.66:39479

| Comm: ws://100.96.165.66:39479 | Total threads: 1 |

| Dashboard: http://100.96.165.66:33237/status | Memory: 50.00 GiB |

| Nanny: ws://100.96.165.66:37105 | |

| Local directory: /rapids/notebooks/dask-worker-space/worker-0g5qv_br | |

| GPU: Tesla V100-SXM2-32GB-LS | GPU memory: 32.00 GiB |

| Tasks executing: 0 | Tasks in memory: 0 |

| Tasks ready: 0 | Tasks in flight: 0 |

| CPU usage: 2.0% | Last seen: Just now |

| Memory usage: 408.19 MiB | Spilled bytes: 0 B |

| Read bytes: 4.38 kiB | Write bytes: 9.44 kiB |

Worker: ws://100.96.165.66:41755

| Comm: ws://100.96.165.66:41755 | Total threads: 1 |

| Dashboard: http://100.96.165.66:46327/status | Memory: 50.00 GiB |

| Nanny: ws://100.96.165.66:44857 | |

| Local directory: /rapids/notebooks/dask-worker-space/worker-3bxagf78 | |

| GPU: Tesla V100-SXM2-32GB-LS | GPU memory: 32.00 GiB |

| Tasks executing: 0 | Tasks in memory: 0 |

| Tasks ready: 0 | Tasks in flight: 0 |

| CPU usage: 4.0% | Last seen: Just now |

| Memory usage: 406.00 MiB | Spilled bytes: 0 B |

| Read bytes: 3.13 kiB | Write bytes: 6.71 kiB |

Worker: ws://100.96.165.66:42253

| Comm: ws://100.96.165.66:42253 | Total threads: 1 |

| Dashboard: http://100.96.165.66:45909/status | Memory: 50.00 GiB |

| Nanny: ws://100.96.165.66:43073 | |

| Local directory: /rapids/notebooks/dask-worker-space/worker-o1u0hz8e | |

| GPU: Tesla V100-SXM2-32GB-LS | GPU memory: 32.00 GiB |

| Tasks executing: 0 | Tasks in memory: 0 |

| Tasks ready: 0 | Tasks in flight: 0 |

| CPU usage: 0.0% | Last seen: Just now |

| Memory usage: 405.79 MiB | Spilled bytes: 0 B |

| Read bytes: 0.0 B | Write bytes: 0.0 B |

Worker: ws://100.96.165.66:45377

| Comm: ws://100.96.165.66:45377 | Total threads: 1 |

| Dashboard: http://100.96.165.66:42355/status | Memory: 50.00 GiB |

| Nanny: ws://100.96.165.66:33433 | |

| Local directory: /rapids/notebooks/dask-worker-space/worker-ycv933o4 | |

| GPU: Tesla V100-SXM2-32GB-LS | GPU memory: 32.00 GiB |

| Tasks executing: 0 | Tasks in memory: 0 |

| Tasks ready: 0 | Tasks in flight: 0 |

| CPU usage: 0.0% | Last seen: Just now |

| Memory usage: 405.77 MiB | Spilled bytes: 0 B |

| Read bytes: 1.26 kiB | Write bytes: 2.75 kiB |

Worker: ws://100.96.165.66:46819

| Comm: ws://100.96.165.66:46819 | Total threads: 1 |

| Dashboard: http://100.96.165.66:33287/status | Memory: 50.00 GiB |

| Nanny: ws://100.96.165.66:46089 | |

| Local directory: /rapids/notebooks/dask-worker-space/worker-2h50aat7 | |

| GPU: Tesla V100-SXM2-32GB-LS | GPU memory: 32.00 GiB |

| Tasks executing: 0 | Tasks in memory: 0 |

| Tasks ready: 0 | Tasks in flight: 0 |

| CPU usage: 2.0% | Last seen: Just now |

| Memory usage: 406.49 MiB | Spilled bytes: 0 B |

| Read bytes: 2.51 kiB | Write bytes: 5.33 kiB |

n_workers = len(client.scheduler_info()["workers"])
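The worker count comes from the keys of the `"workers"` mapping, which are the worker addresses listed in the output above. As a rough sketch of how the same mapping could be used to see how workers are spread across hosts, the hypothetical helper below groups addresses by hostname. It runs on a small stand-in dictionary, so it does not need a live cluster.

```python
from collections import Counter
from urllib.parse import urlparse


def workers_per_host(scheduler_info):
    """Count workers per host from a dict shaped like client.scheduler_info()."""
    hosts = (urlparse(address).hostname for address in scheduler_info["workers"])
    return dict(Counter(hosts))


# Stand-in for client.scheduler_info(); only the worker addresses are used here.
example_info = {
    "workers": {
        "ws://100.96.148.133:33001": {},
        "ws://100.96.148.133:35365": {},
        "ws://100.96.165.66:34765": {},
    }
}

print(workers_per_host(example_info))
```

Applied to the cluster shown above, `workers_per_host(client.scheduler_info())` would be expected to report 6 workers on 100.96.148.133 and 8 on 100.96.165.66, for 14 in total.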

Perform hyperparameter optimization with a toy example

Now we can run hyperparameter optimization. The workers will run multiple training jobs in parallel.

def objective(trial):
    # suggest_float replaces suggest_uniform, which is deprecated since Optuna 3.0
    x = trial.suggest_float("x", -10, 10)
    return (x - 2) ** 2

import optuna
from dask.distributed import wait

# Total number of hyperparameter combinations to try
n_trials = 100

# Optimize in parallel on your Dask cluster
backend_storage = optuna.storages.InMemoryStorage()
dask_storage = optuna.integration.DaskStorage(storage=backend_storage, client=client)
study = optuna.create_study(direction="minimize", storage=dask_storage)

# Submit every trial up front, grouped into batches of n_workers * 4
futures = []
for i in range(0, n_trials, n_workers * 4):
    iter_range = (i, min(i + n_workers * 4, n_trials))
    futures.append(
        {
            "range": iter_range,
            "futures": [
                client.submit(study.optimize, objective, n_trials=1, pure=False)
                for _ in range(*iter_range)
            ],
        }
    )

# Wait for each batch in turn and report progress
for partition in futures:
    iter_range = partition["range"]
    print(f"Testing hyperparameter combinations {iter_range[0]}..{iter_range[1]}")
    _ = wait(partition["futures"])

/tmp/ipykernel_618/3307148639.py:9: ExperimentalWarning: DaskStorage is experimental (supported from v3.1.0). The interface can change in the future.

dask_storage = optuna.integration.DaskStorage(storage=backend_storage, client=client)

Testing hyperparameter combinations 0..56

Testing hyperparameter combinations 56..100
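The two progress messages follow from how the loop groups trials: each batch holds `n_workers * 4` trials, and the last batch is clipped to `n_trials`. The sketch below reproduces only that arithmetic, without a cluster; `make_batches` is a hypothetical helper, and `n_workers = 14` is taken from the cluster output shown earlier.

```python
def make_batches(n_trials, batch_size):
    """Split range(n_trials) into consecutive (start, stop) batches."""
    return [
        (start, min(start + batch_size, n_trials))
        for start in range(0, n_trials, batch_size)
    ]


n_workers = 14              # worker count reported by the cluster above
batch_size = n_workers * 4  # 56 trials per batch

for start, stop in make_batches(100, batch_size):
    print(f"Testing hyperparameter combinations {start}..{stop}")
```

Note that the batches only control when progress is reported. All trials are submitted to the scheduler before the first `wait` call, so the workers stay busy across batch boundaries.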

study.best_params

{'x': 1.9717191009722854}

study.best_value

0.0007998092498157874
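Because the toy objective is `(x - 2) ** 2`, its true minimum is 0 at `x = 2`, which makes the result easy to sanity-check. The short sketch below recomputes the objective from the reported `best_params` and compares it with the reported `best_value`; the function name `toy_objective` is hypothetical, and the numbers are copied from the output above.

```python
import math


def toy_objective(x):
    """Same formula as the notebook's toy objective, taking x directly."""
    return (x - 2) ** 2


best_x = 1.9717191009722854        # study.best_params["x"] from the output above
best_value = 0.0007998092498157874  # study.best_value from the output above

matches = math.isclose(toy_objective(best_x), best_value, rel_tol=1e-9)
print(f"Recomputed value matches reported best_value: {matches}")
print(f"Distance from the true minimum at x = 2: {abs(best_x - 2):.4f}")
```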

Perform hyperparameter optimization with XGBoost GPU algorithm

Now let’s try optimizing hyperparameters for an XGBoost model.

import xgboost as xgb

from optuna.samplers import RandomSampler

from sklearn.datasets import load_breast_cancer

from sklearn.model_selection import KFold, cross_val_score

def objective(trial):
    X, y = load_breast_cancer(return_X_y=True)
    params = {
        "n_estimators": 10,
        "verbosity": 0,
        # GPU histogram algorithm. XGBoost 2.0+ spells this
        # tree_method="hist" together with device="cuda".
        "tree_method": "gpu_hist",
        # L2 regularization weight.
        "lambda": trial.suggest_float("lambda", 1e-8, 100.0, log=True),
        # L1 regularization weight.
        "alpha": trial.suggest_float("alpha", 1e-8, 100.0, log=True),
        # Fraction of columns sampled for each tree.
        "colsample_bytree": trial.suggest_float("colsample_bytree", 0.2, 1.0),
        "max_depth": trial.suggest_int("max_depth", 2, 10, step=1),
        # Minimum child weight; larger values make the tree more conservative.
        "min_child_weight": trial.suggest_float(
            "min_child_weight", 1e-8, 100, log=True
        ),
        "learning_rate": trial.suggest_float("learning_rate", 1e-8, 1.0, log=True),
        # Minimum loss reduction required to make a further split.
        "gamma": trial.suggest_float("gamma", 1e-8, 1.0, log=True),
        "grow_policy": "depthwise",
        "eval_metric": "logloss",
    }
    clf = xgb.XGBClassifier(**params)
    fold = KFold(n_splits=5, shuffle=True, random_state=0)
    score = cross_val_score(clf, X, y, cv=fold, scoring="neg_log_loss")
    return score.mean()
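Several of the ranges in the objective span many orders of magnitude and are sampled with `log=True`, which draws values in the log domain instead of the linear one. To illustrate why that matters, here is a minimal standard-library sketch of log-uniform sampling. It is an illustration of the idea, not Optuna's implementation, and `sample_log_uniform` is a hypothetical name.

```python
import math
import random


def sample_log_uniform(rng, low, high):
    """Draw a value whose logarithm is uniform on [log(low), log(high)]."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))


rng = random.Random(0)
samples = [sample_log_uniform(rng, 1e-8, 100.0) for _ in range(10_000)]

# The range covers ten decades (1e-8 to 1e2), so about half of the
# samples should fall in the lower five decades, below 1e-3.
fraction_small = sum(s < 1e-3 for s in samples) / len(samples)
print(f"Fraction of samples below 1e-3: {fraction_small:.2f}")
```

With linear sampling over the same range, almost no draws would land below 1e-3, so small regularization weights and learning rates would effectively never be tried.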

# Total number of hyperparameter combinations to try
n_trials = 1000

# Optimize in parallel on your Dask cluster
backend_storage = optuna.storages.InMemoryStorage()
dask_storage = optuna.integration.DaskStorage(storage=backend_storage, client=client)
study = optuna.create_study(
    direction="maximize", sampler=RandomSampler(seed=0), storage=dask_storage
)

# Submit every trial up front, grouped into batches of n_workers * 4
futures = []
for i in range(0, n_trials, n_workers * 4):
    iter_range = (i, min(i + n_workers * 4, n_trials))
    futures.append(
        {
            "range": iter_range,
            "futures": [
                client.submit(study.optimize, objective, n_trials=1, pure=False)
                for _ in range(*iter_range)
            ],
        }
    )

# Wait for each batch in turn and report progress
for partition in futures:
    iter_range = partition["range"]
    print(f"Testing hyperparameter combinations {iter_range[0]}..{iter_range[1]}")
    _ = wait(partition["futures"])

/tmp/ipykernel_618/4264989174.py:6: ExperimentalWarning: DaskStorage is experimental (supported from v3.1.0). The interface can change in the future.

dask_storage = optuna.integration.DaskStorage(storage=backend_storage, client=client)

Testing hyperparameter combinations 0..56

Testing hyperparameter combinations 56..112

Testing hyperparameter combinations 112..168

Testing hyperparameter combinations 168..224

Testing hyperparameter combinations 224..280

Testing hyperparameter combinations 280..336

Testing hyperparameter combinations 336..392

Testing hyperparameter combinations 392..448

Testing hyperparameter combinations 448..504

Testing hyperparameter combinations 504..560

Testing hyperparameter combinations 560..616

Testing hyperparameter combinations 616..672

Testing hyperparameter combinations 672..728

Testing hyperparameter combinations 728..784

Testing hyperparameter combinations 784..840

Testing hyperparameter combinations 840..896

Testing hyperparameter combinations 896..952

Testing hyperparameter combinations 952..1000

study.best_params

{'lambda': 1.9471539598103378,

'alpha': 1.1141784696858766e-08,

'colsample_bytree': 0.7422532294369841,

'max_depth': 4,

'min_child_weight': 0.2248745054413427,

'learning_rate': 0.4983200494234886,

'gamma': 9.77293810275356e-07}

study.best_value

-0.10351123544715746
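The best value is negative because the objective returns the mean of `scoring="neg_log_loss"`, and the study is created with `direction="maximize"`. Maximizing the negated log loss is the same as minimizing the log loss itself. The sketch below computes a binary log loss by hand to make the sign convention concrete; the labels and probabilities are made up for illustration and are not model output.

```python
import math


def binary_log_loss(y_true, y_prob):
    """Mean negative log-likelihood of binary labels under predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


y_true = [1, 0, 1, 1]
y_prob = [0.9, 0.1, 0.8, 0.7]  # illustrative probabilities of class 1

loss = binary_log_loss(y_true, y_prob)
score = -loss  # the quantity a "neg_log_loss" scorer reports: higher is better

print(f"log loss = {loss:.4f}, neg_log_loss = {score:.4f}")
```

Read this way, the reported `best_value` of about -0.1035 corresponds to a mean cross-validated log loss of about 0.1035.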

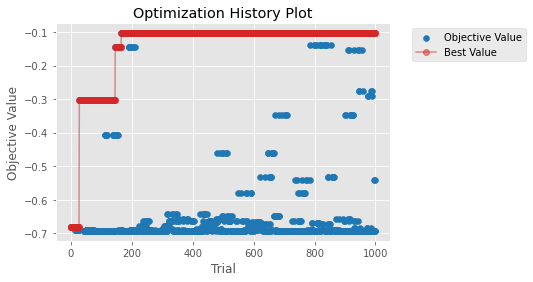

Let’s visualize the progress made by hyperparameter optimization.

from optuna.visualization.matplotlib import (
    plot_optimization_history,
    plot_param_importances,
)

plot_optimization_history(study)

/tmp/ipykernel_618/3324289224.py:1: ExperimentalWarning: plot_optimization_history is experimental (supported from v2.2.0). The interface can change in the future.

plot_optimization_history(study)

<AxesSubplot:title={'center':'Optimization History Plot'}, xlabel='Trial', ylabel='Objective Value'>

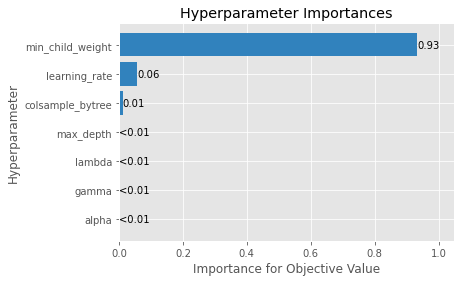
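An optimization history plot is easiest to read alongside the best value found so far after each trial. As a rough sketch of that quantity, and not of Optuna's plotting code, the hypothetical helper below computes a running best over a list of objective values. The values are illustrative and are not taken from the study above.

```python
from itertools import accumulate


def running_best(values, direction="maximize"):
    """Return the best objective value seen up to and including each trial."""
    pick = max if direction == "maximize" else min
    return list(accumulate(values, pick))


# Illustrative objective values in trial order (not from the study above).
trial_values = [-0.69, -0.35, -0.52, -0.12, -0.30, -0.10]

for trial_number, best in enumerate(running_best(trial_values)):
    print(f"trial {trial_number}: best so far = {best}")
```

With a random sampler, as used in this notebook, the running best improves in occasional jumps because each trial is drawn independently of earlier results.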

plot_param_importances(study)

/tmp/ipykernel_618/3836449081.py:1: ExperimentalWarning: plot_param_importances is experimental (supported from v2.2.0). The interface can change in the future.

plot_param_importances(study)

<AxesSubplot:title={'center':'Hyperparameter Importances'}, xlabel='Importance for Objective Value', ylabel='Hyperparameter'>