HPO Benchmarking with RAPIDS and Dask

Hyper-Parameter Optimization (HPO) helps to find the best version of a model by exploring the space of possible configurations. While generally desirable, this search is computationally expensive and time-consuming.

In the notebook demo below, we compare benchmarking results to show how GPUs can accelerate HPO tuning jobs relative to CPUs.

For instance, we find a 48x speedup in wall clock time (0.71 hrs vs 34.6 hrs) for XGBoost and 16x (3.86 hrs vs 63.2 hrs) for RandomForest when comparing between p3.8xlarge Tesla V100 GPUs and c5.24xlarge CPU EC2 instances on 100 HPO trials of the 3-year Airline Dataset.
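The speedup figures follow directly from the quoted wall-clock times. A minimal sketch of that arithmetic is shown here; the helper name speedup is ours for illustration and is not part of the benchmark code.

```python
def speedup(cpu_hours: float, gpu_hours: float) -> float:
    """Return how many times faster the GPU run finished than the CPU run."""
    return cpu_hours / gpu_hours


# Wall-clock hours quoted above for 100 HPO trials on the 3-year dataset.
xgboost_speedup = speedup(cpu_hours=34.6, gpu_hours=0.71)
random_forest_speedup = speedup(cpu_hours=63.2, gpu_hours=3.86)

print(f"XGBoost speedup: {xgboost_speedup:.1f}x")
print(f"RandomForest speedup: {random_forest_speedup:.1f}x")
```

Both ratios land slightly above the rounded-down 48x and 16x figures quoted in the text.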

Preamble

You can set up a local environment, but we recommend launching a virtual machine on a cloud service (Azure, AWS, GCP, etc.).

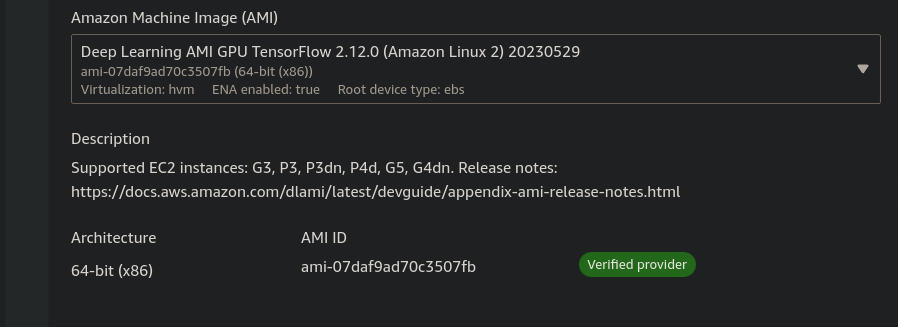

For the purposes of this notebook, we will use an Amazon Machine Image (AMI) as the starting point.

See Documentation

Please follow the instructions in AWS Elastic Compute Cloud (EC2) to launch an EC2 instance with GPUs, the NVIDIA Driver, and the NVIDIA Container Runtime.

Note

When configuring your instance, ensure you select the Deep Learning AMI GPU TensorFlow or PyTorch in the AMI selection box under “Amazon Machine Image (AMI)”.

Once your instance is running and you have access to Jupyter, save this notebook and run through the cells.

Python ML Workflow

To work with the RAPIDS container, the entrypoint logic should parse arguments, load, preprocess, and split the data, build and train a model, score/evaluate the trained model, and emit an output representing the final score for the given hyperparameter setting.
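The stages listed above can be sketched as a small, self-contained script. This is only an illustration of the control flow, not the contents of hpo.py: the data is synthetic, the "model" is a fixed threshold rule that does no real fitting, and every name here (the --threshold argument, load_data, split, train, score, and the final-score output format) is hypothetical.

```python
import argparse
import random


def parse_args(argv=None):
    parser = argparse.ArgumentParser()
    # Hypothetical hyperparameter for the toy model below.
    parser.add_argument("--threshold", type=float, default=15.0)
    parser.add_argument("--seed", type=int, default=0)
    return parser.parse_args(argv)


def load_data(seed, n_rows=1000):
    """Stand-in for reading the dataset: (departure delay, late flag) rows."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n_rows):
        dep_delay = rng.uniform(-10, 60)
        arr_delay = dep_delay + rng.gauss(0, 5)
        rows.append((dep_delay, int(arr_delay > 15)))
    return rows


def split(rows, test_fraction=0.25):
    """Hold out the last test_fraction of the rows for evaluation."""
    cut = int(len(rows) * (1 - test_fraction))
    return rows[:cut], rows[cut:]


def train(train_rows, threshold):
    """Toy 'model': predict late when departure delay exceeds the threshold.

    train_rows is unused here; a real model would be fitted on it.
    """
    return lambda dep_delay: int(dep_delay > threshold)


def score(model, test_rows):
    """Accuracy of the model on the held-out rows."""
    correct = sum(model(x) == y for x, y in test_rows)
    return correct / len(test_rows)


def main(argv=None):
    args = parse_args(argv)
    rows = load_data(args.seed)
    train_rows, test_rows = split(rows)
    model = train(train_rows, args.threshold)
    accuracy = score(model, test_rows)
    # Emit the final score for this hyperparameter setting.
    print(f"final-score: {accuracy:.4f}")
    return accuracy
```

Calling main(["--threshold", "15"]) runs one full pass through the stages and returns the accuracy that an HPO driver would then try to maximize.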

Let’s have a step-by-step look at each stage of the ML workflow:

Dataset

We leverage the Airline dataset, a large public collection of US domestic flight logs, which we offer in various sizes (1 year, 3 year, and 10 year) in Parquet (compressed column storage) format. The machine learning objective with this dataset is to predict whether a flight will arrive at its destination more than 15 minutes late.

We host the demo dataset in public S3 demo buckets in both the us-east-1 and us-west-2 regions. To optimize performance, we recommend that you access the S3 bucket in the same region as your EC2 instance to reduce network latency and data transfer costs.

For this demo, we are using the 3_year dataset, which includes the following features, to mention a few:

Date and distance ( Year, Month, Distance )

Airline / carrier ( Flight_Number_Reporting_Airline )

Actual departure and arrival times ( DepTime and ArrTime )

Difference between scheduled & actual times ( ArrDelay and DepDelay )

Binary encoded version of late, aka our target variable ( ArrDelay15 )
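To make the target variable concrete, here is a minimal sketch of how a binary "late" label relates to the arrival delay. It assumes that late means strictly more than 15 minutes, as stated in the objective above; how the published dataset treats a delay of exactly 15 minutes is an assumption, and the helper encode_late is hypothetical.

```python
LATE_THRESHOLD_MINUTES = 15  # from the stated objective: more than 15 minutes late


def encode_late(arr_delay_minutes: float) -> int:
    """Return 1 if the flight arrived more than 15 minutes late, else 0."""
    return int(arr_delay_minutes > LATE_THRESHOLD_MINUTES)


# Three made-up flights: early, exactly at the threshold, and clearly late.
flights = [{"ArrDelay": -3.0}, {"ArrDelay": 15.0}, {"ArrDelay": 42.0}]
labels = [encode_late(flight["ArrDelay"]) for flight in flights]
print(labels)
```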

Configure AWS credentials for access to S3 storage:

aws configure

Download the dataset from the S3 bucket into a data directory under your current working directory:

aws s3 cp --recursive s3://sagemaker-rapids-hpo-us-west-2/3_year/ ./data/

Algorithm

From an ML/algorithm perspective, we offer XGBoost and RandomForest. You are free to switch between these algorithm choices, and everything in the example will continue to work.

import argparse

parser = argparse.ArgumentParser()
parser.add_argument(
    "--model-type", type=str, required=True, choices=["XGBoost", "RandomForest"]
)
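Later snippets also read args.mode and args.seed, so the parser presumably defines those options as well. The following is a sketch under that assumption; the exact choices, defaults, and the build_parser helper are ours and may differ from hpo.py.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="HPO benchmark entrypoint (sketch)")
    parser.add_argument(
        "--model-type", type=str, required=True, choices=["XGBoost", "RandomForest"]
    )
    # Assumed options: inferred from args.mode and args.seed used elsewhere.
    parser.add_argument("--mode", type=str, required=True, choices=["gpu", "cpu"])
    parser.add_argument("--seed", type=int, default=0)  # default value is assumed
    return parser


# Pass an explicit argument list instead of reading sys.argv.
args = build_parser().parse_args(["--model-type", "XGBoost", "--mode", "gpu"])
print(args.model_type, args.mode, args.seed)
```

Note that argparse exposes --model-type as args.model_type, replacing the dash with an underscore.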

We can also optionally increase robustness via reshuffles of the train-test split (i.e., cross-validation folds). Typical values are between 3 and 10 folds. We will use

n_cv_folds = 5
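As an illustration of what reshuffling the train-test split means, the sketch below generates n_cv_folds independent shuffled splits of row indices using only the standard library. The 25% test fraction, the seeding scheme, and the helper name reshuffled_splits are assumptions; the splitting logic in hpo.py may differ.

```python
import random


def reshuffled_splits(n_rows, n_cv_folds, test_fraction=0.25, seed=0):
    """Yield (train_indices, test_indices) for each reshuffled split."""
    n_test = max(1, int(n_rows * test_fraction))
    for fold in range(n_cv_folds):
        indices = list(range(n_rows))
        # A different seed per fold gives a different shuffle each time.
        random.Random(seed + fold).shuffle(indices)
        yield indices[n_test:], indices[:n_test]


n_cv_folds = 5
splits = list(reshuffled_splits(n_rows=20, n_cv_folds=n_cv_folds))
for fold, (train_idx, test_idx) in enumerate(splits):
    print(f"fold {fold}: {len(train_idx)} train rows, {len(test_idx)} test rows")
```

A model would be trained and scored once per split, and the per-split scores averaged to give the value reported for a hyperparameter setting.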

Dask Cluster

To maximize efficiency, we launch a Dask LocalCluster for CPU runs, or a LocalCUDACluster that uses GPUs, for distributed computing. We then connect a Dask Client to submit and manage computations on the cluster.

We can then ingest the data and “persist” it in memory using Dask as follows:

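import os
from dask.distributed import Client, LocalCluster
from dask_cuda import LocalCUDACluster

if args.mode == "gpu":
    cluster = LocalCUDACluster()
else:  # mode == "cpu"
    cluster = LocalCluster(n_workers=os.cpu_count())

with Client(cluster) as client:
    dataset = ingest_data(mode=args.mode)
    # persist() returns a new collection; rebind it so the in-memory copy is used
    dataset = client.persist(dataset)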

Search Range

One of the most important choices when running HPO is choosing the bounds of the hyperparameter search. In this notebook, we leverage Optuna, a widely used Python library for hyperparameter optimization.

Here are the quick steps to get started with Optuna:

Define the objective function, which represents the model training and evaluation process. It takes hyperparameters as inputs and returns a metric to optimize (accuracy, in our case). Refer to train_xgboost() and train_randomforest() in hpo.py.

Specify the search space using the Trial object’s methods to define the hyperparameters and their corresponding value ranges or distributions. For example:

"max_depth": trial.suggest_int("max_depth", 4, 8),

"max_features": trial.suggest_float("max_features", 0.1, 1.0),

"learning_rate": trial.suggest_float("learning_rate", 0.001, 0.1, log=True),

"min_samples_split": trial.suggest_int("min_samples_split", 2, 1000, log=True),

Create an Optuna study object to keep track of trials and their corresponding hyperparameter configurations and evaluation metrics.

import optuna
from optuna.samplers import RandomSampler

study = optuna.create_study(
    sampler=RandomSampler(seed=args.seed), direction="maximize"
)

Select an optimization algorithm to determine how Optuna explores and exploits the search space to find optimal configurations. For instance, the RandomSampler is an algorithm provided by the Optuna library that samples hyperparameter configurations randomly from the search space.

Run the optimization by calling Optuna’s optimize() function on the study object. You can specify the number of trials or the number of parallel jobs to run.

study.optimize(
    lambda trial: train_xgboost(
        trial, dataset=dataset, client=client, mode=args.mode
    ),
    n_trials=100,
    n_jobs=1,
)
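To build intuition for what the steps above do, here is a plain-Python sketch of a seeded random search that maximizes a score. It is not Optuna's implementation: the toy objective stands in for model training, and the helper names are hypothetical. It mirrors two ideas from the search space above: integer bounds that are inclusive at both ends, and log=True, which samples uniformly in log space so that small learning rates are explored as often as large ones.

```python
import math
import random


def sample_log_uniform(rng, low, high):
    """Sample a float uniformly in log space between low and high."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))


def sample_params(rng):
    return {
        "max_depth": rng.randint(4, 8),  # both bounds inclusive
        "learning_rate": sample_log_uniform(rng, 0.001, 0.1),
    }


def toy_objective(params):
    """Hypothetical score that peaks at max_depth=6 and learning_rate=0.01."""
    depth_term = -abs(params["max_depth"] - 6) / 10
    lr_term = -abs(math.log10(params["learning_rate"]) + 2) / 10
    return 1.0 + depth_term + lr_term


def random_search(n_trials, seed):
    """Try n_trials random configurations and keep the best-scoring one."""
    rng = random.Random(seed)
    best_score, best_params = -math.inf, None
    for _ in range(n_trials):
        params = sample_params(rng)
        score = toy_objective(params)
        if score > best_score:  # direction="maximize"
            best_score, best_params = score, params
    return best_score, best_params


best_score, best_params = random_search(n_trials=100, seed=0)
print(best_score, best_params)
```

In the notebook itself, Optuna's RandomSampler and study.optimize() play the roles of sample_params and the loop in random_search, and the objective trains and scores a real model.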

Build RAPIDS Container

Now that we have a fundamental understanding of our workflow, we can test the code. First, run the nvidia-smi command to confirm that the CUDA version reported by your driver is compatible with the container image.

Then, starting from the RAPIDS Docker image, we only need to install Optuna, as the container comes with most of the necessary packages.

FROM rapidsai/rapidsai:23.06-cuda11.8-runtime-ubuntu22.04-py3.10

RUN mamba install -y -n rapids optuna

The build step will be dominated by the download of the RAPIDS image (base layer). If it has already been downloaded, the build will take less than 1 minute.

docker build -t rapids-tco-benchmark:v23.06 .

Executing benchmark tests can be an arduous and time-consuming procedure that may extend over multiple days. By using a tool like tmux, you can maintain active terminal sessions, ensuring that your tasks continue running even if the SSH connection is interrupted.

tmux

When starting the container, be sure to pass the --gpus all flag to make all available GPUs on the host machine accessible within the Docker environment. Use the -v (or --volume) option to mount the working directory from the host machine into the Docker container. This makes data and directories on the host accessible within the container, and any changes made to the mounted files or directories are reflected in both the host and container environments.

Optionally, publish ports 8786, 8787, and 8888 to expose the Dask scheduler, the Dask dashboard, and Jupyter.

docker run -it --gpus all -p 8888:8888 -p 8787:8787 -p 8786:8786 -v \
    /home/ec2-user/tco_hpo_gpu_cpu_perf_benchmark:/rapids/notebooks/host \
    rapids-tco-benchmark:v23.06

Run HPO

Navigate to the host directory inside the container and run the Python training script with the following command:

python ./hpo.py --model-type "XGBoost" --mode "gpu" > xgboost_gpu.txt 2>&1

The command above runs XGBoost HPO jobs on the GPU and writes the benchmark results to a text file. You can run the same benchmark for RandomForest or on the CPU by changing the --model-type and --mode arguments to RandomForest and cpu, respectively.
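If you want to queue all four model and mode combinations in one go, a small driver script is one option. The sketch below only builds the command lines and log file names; the log names other than xgboost_gpu.txt follow the same pattern by assumption, and run_all is a hypothetical helper that is defined but not called here.

```python
import itertools
import subprocess

MODEL_TYPES = ["XGBoost", "RandomForest"]
MODES = ["gpu", "cpu"]


def build_runs():
    """Return (argv, log_path) pairs for every model/mode combination."""
    runs = []
    for model_type, mode in itertools.product(MODEL_TYPES, MODES):
        argv = ["python", "./hpo.py", "--model-type", model_type, "--mode", mode]
        log_path = f"{model_type.lower()}_{mode}.txt"  # naming pattern is assumed
        runs.append((argv, log_path))
    return runs


def run_all():
    """Run each benchmark in sequence, sending stdout and stderr to its log."""
    for argv, log_path in build_runs():
        with open(log_path, "w") as log_file:
            subprocess.run(argv, stdout=log_file, stderr=subprocess.STDOUT, check=False)


for argv, log_path in build_runs():
    print(" ".join(argv), ">", log_path)
```

Running the jobs sequentially keeps each benchmark from competing with the others for CPU and GPU resources, which matters when the goal is to compare wall-clock times.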